Jul 1, 2025

Content recommendation algorithms now determine what 4.8 billion social media users see daily. Facebook's News Feed algorithm processes over 100,000 signals per user to rank posts. YouTube's recommendation system drives 70% of watch time on the platform. Twitter's timeline algorithm evaluates hundreds of factors to surface tweets. These systems don't just suggest content; they fundamentally shape public discourse, influence election outcomes, and determine which information reaches millions during crises.

In current recommendation systems, there is no mechanism for users to verify that platforms actually run the algorithms they claim to use. When Twitter open-sourced its recommendation algorithm in 2023, users still could not verify whether their actual feeds matched the published code.

In collaboration with Rekt News, we've launched a groundbreaking proof-of-concept: cryptographically verifiable content recommendations. It lets users independently verify, directly from their browsers, that each recommendation was produced by an open-source recommendation algorithm. This initiative is the first step in a broader vision: expanding the capability vertically by enriching the algorithm's features, and horizontally by bringing verifiable recommendations to other platforms, particularly social apps. Ultimately, we aim to pave the way toward a decentralized, trustless internet that shifts control from centralized platforms to empowered users.

About Rekt News

Rekt News is a leading investigative journalism platform focused on decentralized finance (DeFi) and blockchain security. Known for their in-depth analysis of crypto exploits, protocol vulnerabilities, and market manipulation, Rekt News has established itself as a trusted source for critical information in the blockchain ecosystem.

The platform's commitment to transparency and accountability in the crypto space makes it an ideal partner for pioneering verifiable content recommendations. Just as Rekt News holds DeFi protocols accountable through rigorous investigation, our collaboration demonstrates how recommendation algorithms can be held to the same standard of transparency and verifiability. Its audience of security-conscious crypto users is the perfect testing ground for cryptographically verifiable content delivery: these are users who understand the importance of trustless systems and independent verification.

I. How Verifiable Recommendations Work

To build this verifiable recommendation system, we used succinct zero-knowledge proofs (ZKPs), a cryptographic method in which one party (the prover) convinces another party (the verifier) that a statement is true, in our case that a computation was executed correctly, without the verifier having to re-run the computation. In our implementation, this allows the Rekt News platform to prove cryptographically that each recommendation genuinely results from executing our open-source recommendation algorithm, so users never need to re-run the recommendation process themselves. The elegance of this approach lies in the proof's compact size, which enables real-time verification directly within users' browsers without compromising performance or requiring additional infrastructure.

The technical foundation of our system combines LuminAIR, an open-source framework we developed to ensure the computational integrity of machine learning models, with the S-two zk-prover created by StarkWare. LuminAIR serves as the bridge between traditional machine learning computations and cryptographic verification: it turns each execution of a recommendation algorithm into a proof that anyone can independently verify.

II. Technical Architecture

Our verifiable recommendation system implements a content-based filtering approach powered by cryptographic proof generation. The architecture operates through three distinct phases: feature extraction, similarity computation, and proof generation.

i. Feature Extraction and Vectorization

The system begins by processing textual content with natural language processing techniques. We extract unigrams (individual words) as well as higher-order n-grams (bigrams and trigrams) to capture multi-word phrases and local context within the text. This multi-gram approach lets the algorithm account not just for individual terms, but for how words combine to convey meaning.
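A minimal Python sketch of this extraction step, assuming simple lowercase word tokenization. The tokenizer regex and the function names `tokenize` and `extract_ngrams` are illustrative assumptions, not taken from the project's code:

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens (assumed tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def extract_ngrams(text: str, max_n: int = 3) -> list[str]:
    """Return every 1-gram up to max_n-gram, each joined by a single space."""
    tokens = tokenize(text)
    features = []
    for n in range(1, max_n + 1):
        # Slide a window of n consecutive tokens across the sequence.
        for i in range(len(tokens) - n + 1):
            features.append(" ".join(tokens[i:i + n]))
    return features


if __name__ == "__main__":
    print(extract_ngrams("Flash loan exploit drains pool"))
```

On a five-word headline this yields 5 unigrams, 4 bigrams and 3 trigrams, so a phrase such as "flash loan" becomes a feature of its own, distinct from the words "flash" and "loan".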

These extracted features are then transformed into TF-IDF (Term Frequency-Inverse Document Frequency) vectors. The TF-IDF approach creates a mathematical representation where each document becomes a vector in high-dimensional space. Terms that appear frequently in a specific document but rarely across the entire corpus receive higher weights, ensuring that distinctive vocabulary drives similarity calculations rather than common words that appear everywhere.
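The weighting can be sketched in a few lines of Python. This is an illustrative sketch, not the production weighting: it assumes term frequency is a term's share of the document's features and uses the smoothed IDF form ln((1 + N) / (1 + df)) + 1, the variant scikit-learn applies by default. Vectors are stored sparsely as `{term: weight}` dictionaries, with one implicit dimension per distinct term in the corpus.

```python
import math
from collections import Counter


def tfidf_vectors(docs: list[list[str]]) -> list[dict[str, float]]:
    """Turn each document (a list of extracted terms) into a sparse TF-IDF vector."""
    n_docs = len(docs)
    # Document frequency: how many documents contain each term at least once.
    df = Counter(term for doc in docs for term in set(doc))
    # Smoothed inverse document frequency: rare terms get larger weights,
    # while the "+ 1.0" keeps terms found in every document from dropping to zero.
    idf = {t: math.log((1 + n_docs) / (1 + d)) + 1.0 for t, d in df.items()}
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        # Term frequency (share of the document's terms) multiplied by IDF.
        vectors.append({t: (c / total) * idf[t] for t, c in counts.items()})
    return vectors


if __name__ == "__main__":
    corpus = [
        ["flash", "loan", "flash loan", "oracle"],
        ["oracle", "price", "manipulation"],
        ["flash", "loan", "flash loan", "bridge"],
    ]
    for vec in tfidf_vectors(corpus):
        print({t: round(w, 3) for t, w in vec.items()})
```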

ii. Similarity Computation

Document similarity is calculated using cosine similarity between TF-IDF vectors. This operation measures the angle between two vectors in the feature space; because TF-IDF weights are never negative, the resulting score falls between 0 (no shared terms) and 1 (vectors pointing in the same direction). LuminAIR constructs a computational graph that precisely defines every mathematical operation required for this calculation, creating a deterministic sequence of tensor operations that can be cryptographically verified.
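For reference, here is the same computation in plain floating-point Python over sparse `{term: weight}` dictionaries. It is an illustrative sketch: LuminAIR expresses these steps as a tensor graph rather than Python loops, and returning 0 for an empty vector is a choice assumed here. The function reduces to exactly the operations such a graph has to encode: element-wise products summed into a dot product, two squared norms with square roots, and a final division.

```python
import math


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine of the angle between two sparse vectors stored as {term: weight}."""
    # Dot product: only terms present in both vectors contribute.
    dot = sum(w * b[t] for t, w in a.items() if t in b)
    # Euclidean norms of each vector.
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # Assumed convention: an empty document matches nothing.
    return dot / (norm_a * norm_b)


if __name__ == "__main__":
    article = {"flash loan": 0.9, "oracle": 0.4}
    candidate = {"flash loan": 0.7, "bridge": 0.5}
    print(round(cosine_similarity(article, candidate), 3))
```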

iii. Cryptographic Proof Generation

The computational graph is compiled with LuminAIR into a circuit representation compatible with zero-knowledge STARK proof systems. We leverage Circle STARKs, a form of STARK proof that works over a Mersenne prime field; S-two uses M31, the prime 2^31 - 1. Because 2^31 is congruent to 1 modulo this prime, reduction needs only shifts and additions, and every field element fits in a 32-bit machine word, which makes arithmetic fast on ordinary hardware. During execution, the system generates a computational trace that records every step of the recommendation computation. This trace serves as the witness for STARK proof generation, which produces a cryptographic proof that the computation was performed correctly according to the specified algorithm. The resulting proof, typically around 32 KB, contains all the information needed for independent verification without re-executing the recommendation.
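To make "Mersenne prime field" and "computational trace" concrete, here is a toy Python sketch; it is not LuminAIR or S-two code. It assumes the M31 field and a hypothetical fixed-point encoding with 12 fractional bits (`to_fixed`), computes a dot product (the core of the cosine similarity above), records one trace row per step, and checks each row's transition constraint. A STARK prover commits to such a trace and proves that the constraints hold on every row, so the verifier never reads the trace; the hypothetical `check_trace` below re-reads every row and only illustrates what is being proven.

```python
P = (1 << 31) - 1  # M31: the Mersenne prime 2^31 - 1


def reduce_m31(x: int) -> int:
    """Reduce 0 <= x < 2^62 modulo P using only masks, shifts and additions."""
    # Since 2^31 = 1 (mod P), bits above position 31 fold back onto the low bits.
    x = (x & P) + (x >> 31)
    x = (x & P) + (x >> 31)
    return x - P if x >= P else x


def to_fixed(x: float, scale_bits: int = 12) -> int:
    """Hypothetical fixed-point encoding of a non-negative real as a field element."""
    return reduce_m31(round(x * (1 << scale_bits)))


def dot_with_trace(a: list[int], b: list[int]) -> tuple[int, list[tuple[int, int, int, int, int]]]:
    """Compute sum(a[i] * b[i]) mod P, recording one trace row per step."""
    trace = []
    acc = 0
    for i, (x, y) in enumerate(zip(a, b)):
        new_acc = reduce_m31(acc + reduce_m31(x * y))
        trace.append((i, x, y, acc, new_acc))  # (step, x, y, acc_in, acc_out)
        acc = new_acc
    return acc, trace


def check_trace(trace: list[tuple[int, int, int, int, int]]) -> bool:
    """Naively check every row: rows chain, and acc_out = acc_in + x * y (mod P)."""
    prev = 0
    for _, x, y, acc_in, acc_out in trace:
        if acc_in != prev or acc_out != (acc_in + x * y) % P:
            return False
        prev = acc_out
    return True


if __name__ == "__main__":
    a = [to_fixed(0.5), to_fixed(0.25)]
    b = [to_fixed(1.0), to_fixed(2.0)]
    result, trace = dot_with_trace(a, b)
    for row in trace:
        print(row)
    print("constraints hold:", check_trace(trace))
```

Multiplying two values with 12 fractional bits yields 24 fractional bits, so the example's result is 1 << 24, which encodes 1.0 at the doubled scale. A real fixed-point pipeline would rescale after each multiplication; this sketch omits that step.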

The proof can be verified directly in users' browsers, providing real-time confirmation that recommendations were generated using the published algorithm. Verification requires only the circuit parameters and the proof data, making it accessible to any user without specialized infrastructure or computational resources.

iv. Future Enhancements

Planned improvements include implementing collaborative filtering techniques, which will leverage user behavior patterns and preferences to complement content-based recommendations. We also intend to integrate more sophisticated natural language processing methods, including semantic embeddings and contextual language models, to improve the accuracy and relevance of content similarity calculations.

III. User-Facing Verification Interface

i. Client-Side Verification

The verification system runs entirely within users' browsers through a WebAssembly (WASM) build of the verifier. Verification therefore happens locally on the user's device, eliminating the need to trust external verification services. The WASM-based verifier checks the roughly 32 KB proofs in real time (microseconds), providing immediate feedback on recommendation integrity.

ii. Trust Indicators and User Experience

We designed a verification badge system that functions similarly to HTTPS security indicators, providing immediate visual confirmation of computational integrity without requiring technical expertise. The badge appears alongside each recommendation, offering users a simple way to identify verified content.

Interactive Verification Badge

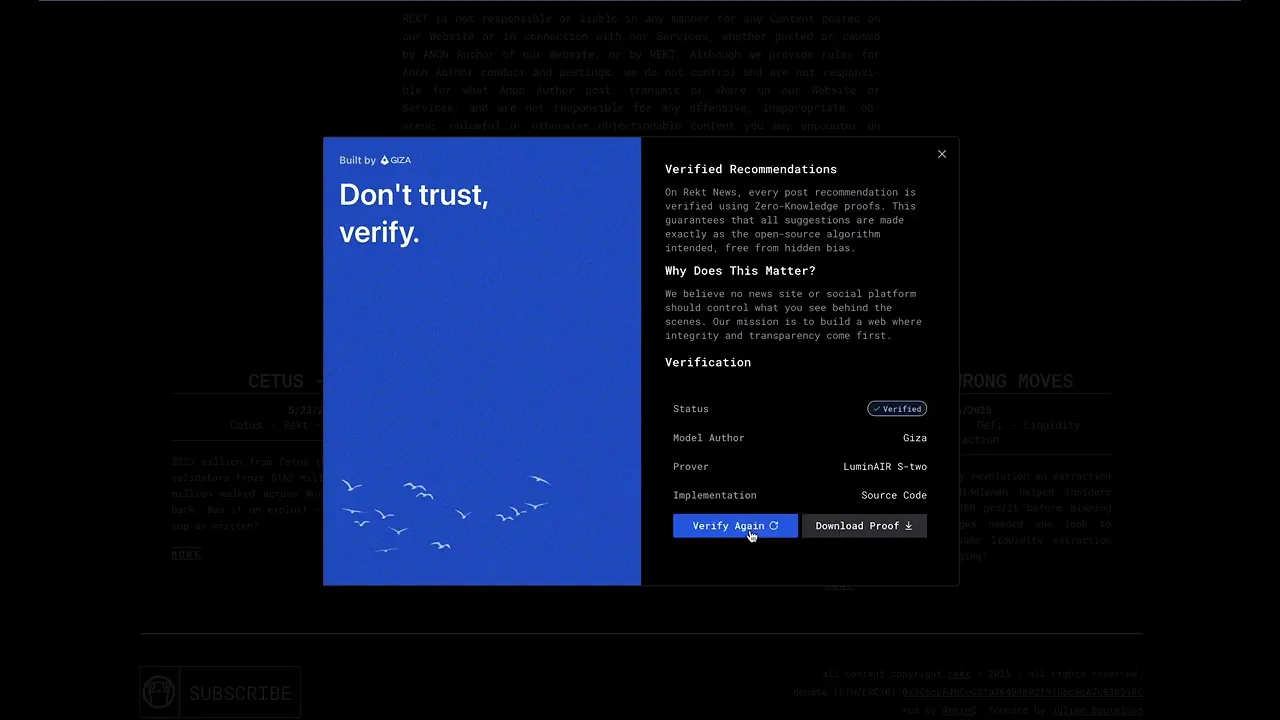

The verification badge serves as a trust certificate for recommendation integrity. When users click the badge, an interactive modal window opens displaying:

Verification Status: Clear confirmation that the recommendation was generated using the published algorithm

Local Verification Details: Explanation of what was verified directly in the user's browser

Re-verification Option: Button allowing users to re-run the verification process at any time

Technical Access: Download option for the cryptographic proof, enabling technically minded users to perform independent analysis

Algorithm Transparency: Direct link to the open-source recommendation algorithm code

Progressive Disclosure Design

The interface follows a progressive disclosure pattern, presenting information at appropriate levels of technical detail:

Surface Level: Simple blue checkmark or verification badge for general users

Intermediate Level: Modal with plain-language explanation of what was verified

Technical Level: Access to raw proof data and circuit parameters

iii. Building User Confidence

The verification interface addresses different user personas and trust requirements:

For General Users: The badge system provides immediate visual confirmation without requiring any understanding of cryptographic proofs. Just as users recognize the HTTPS lock icon as a sign of security, the verification badge becomes a familiar symbol of computational integrity.

For Technical Users: Full access to proof data, verification code, and circuit parameters enables independent auditing and analysis. Technical users can download proofs, examine the verification process, and even build their own verification tools.

For Skeptical Users: The ability to re-run verification at any time provides ongoing assurance. Users can verify recommendations immediately upon receiving them, or re-verify later if they have concerns about system integrity.

This multi-layered approach ensures that verification is accessible to users across the technical spectrum while maintaining the cryptographic rigor necessary for trustless systems.

Conclusion

The verifiable recommendation system represents a fundamental shift toward transparent, user-controlled content discovery. By combining zero-knowledge cryptography with intuitive user interfaces, we've demonstrated that users can independently verify the integrity of recommendation algorithms without sacrificing performance or usability. This proof-of-concept with Rekt News establishes the foundation for a new paradigm where algorithmic transparency becomes the standard rather than the exception.

The implications extend far beyond content recommendations. As artificial intelligence increasingly shapes digital experiences, from search results to financial decisions to healthcare recommendations, the ability to cryptographically verify computational integrity becomes essential for maintaining user trust and algorithmic accountability. Our approach provides a blueprint for bringing transparency to any AI-driven system where users need assurance that published algorithms match actual implementations.

Building Your Own Verifiable AI Systems

The tools and frameworks developed for this project are open-source and available for developers, researchers, and organizations looking to implement verifiable AI systems. LuminAIR provides the computational integrity framework necessary to transform any machine learning model into a cryptographically verifiable system.

Getting Started with LuminAIR:

📚 Documentation: Comprehensive guides and tutorials are available at luminair.gizatech.xyz

🐱 GitHub Repository: Access the complete source code, examples, and community contributions at github.com/gizatechxyz/LuminAIR

Whether you're building recommendation engines, implementing AI-powered decision systems, or creating machine learning applications that require trust and transparency, LuminAIR provides the cryptographic foundation to make your computations verifiable.

The future of AI lies not just in more powerful algorithms, but in systems that users can verify. By making computational integrity accessible to developers worldwide, we're enabling the next generation of transparent, accountable artificial intelligence systems that put control back in the hands of users.

Join us in building a more transparent internet where algorithms serve users rather than hidden agendas, and where trust is earned through verifiable proof rather than corporate promises.